Why this legal team fixed their renewal workflow before fixing the tech

A practical look at buddy reviews, human-in-the-loop AI, and scalable contract review.

👋 Hey there, I’m Hadassah. Each month, I unpack how in-house legal teams use AI to enable the business, protect against risk, and free up time for the work they enjoy most—what works, what doesn’t, and the quick wins that make all the difference.

Before we dive in, a quick note: this is just one example of a legal team solving an operational bottleneck. There are plenty of ways to approach these kinds of problems, and the right solution will always depend on your specific needs and context. My goal is to give you some food for thought as you define what that solution should be.

Problem

When a company-wide restructure cut this legal team’s product and privacy counsel, a surge in vendor renewals exposed a critical capacity gap. The remaining team was swamped, and no one had the bandwidth or expertise to review agreements that were quietly changing under their noses. The problem wasn’t one of legal complexity, but one of capacity and process.

Vendors were silently updating governing terms, introducing risks that were difficult to catch. That meant every renewal needed checking, not just signing. But with the GC already overloaded and no budget to increase headcount, reviews for even routine agreements took up to 24 hours. The absence of a system to triage and track renewals efficiently slowed internal processes and created friction with business teams who grew frustrated waiting for legal sign-off.

Solution

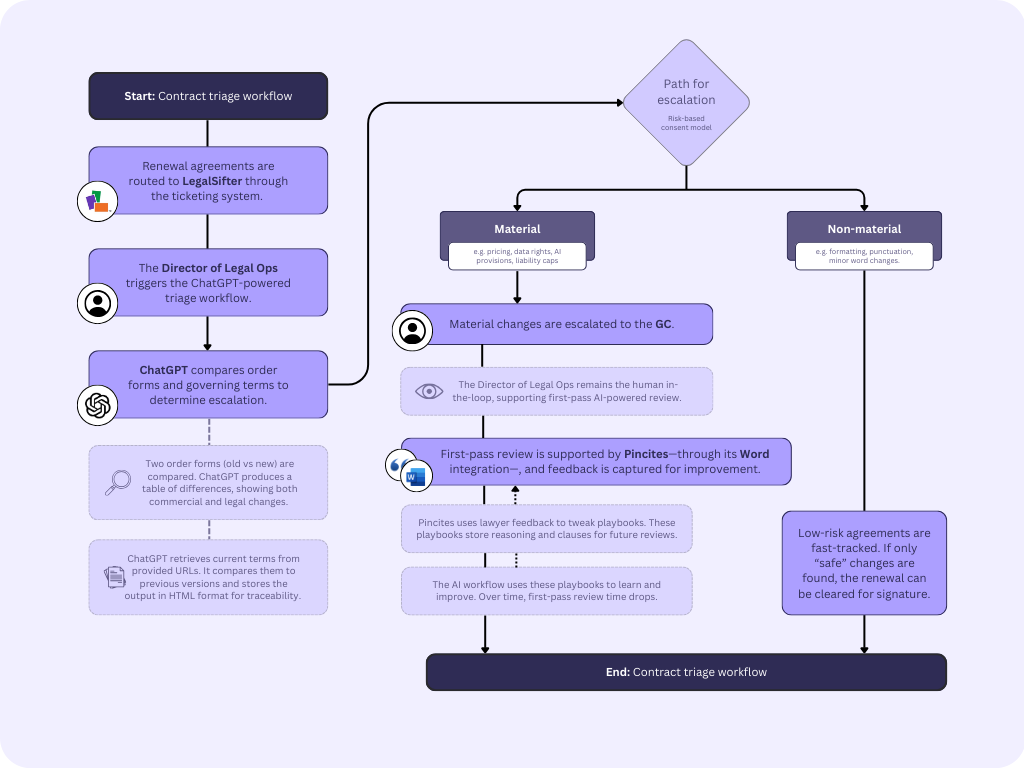

Joining us is the Director of Legal Ops, who was on a mission to cut review time, reduce risk, and keep the GC focused on high-value work. Their solution came in the form of a GPT-powered contract intake and triage workflow built on a layered stack of:

LegalSifter;

ChatGPT; and

Pincites.

Together, these tools automated low-risk vendor renewals, flagged meaningful changes, and routed only the riskiest ones to the legal team for first-pass review.

Let’s dig a little deeper: First, a distinction was made between new agreements (which would still go to the GC) and vendor renewals (non-revenue-generating work that would be managed through the new workflow). In practice, LegalSifter acted as the front door—the intake system and document repository. Renewal agreements arrived there through the team’s existing ticketing process.

From there, a ChatGPT-powered workflow:

Compared order forms and terms. Two order forms were uploaded, and ChatGPT generated a table of commercial and legal differences for instant visibility.

Scraped and stored online terms. ChatGPT pulled the latest vendor terms from the relevant URL, compared them to prior versions, and saved results in HTML for transparency and version tracking.

Identified material changes. The workflow focused on substantive differences—not cosmetic edits—flagging shifts affecting pricing, data use, or AI provisions while ignoring harmless formatting changes.

Escalated only what mattered. Meaningful changes, especially around liability or data use, were escalated to the GC, with the Director of Legal Ops acting as the human in the loop.
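To make the triage logic concrete, here is a minimal sketch of the "compare, spot material changes, escalate" steps. It is not the team's implementation: in their workflow ChatGPT made these judgement calls, whereas this sketch uses a simple rule-based stand-in. The keyword lists, the topic names, and the three routes are all assumptions for illustration, and treating case and whitespace changes as cosmetic is a simplification (capitalisation can matter for defined terms).

```python
import difflib
import re

# Hypothetical keyword lists; a real playbook would define what counts as material.
MATERIAL_TOPICS = {
    "pricing": ["price", "pricing", "fee"],
    "data use": ["personal data", "data processing", "privacy"],
    "liability": ["liability", "indemnif"],
    "ai": ["artificial intelligence", "machine learning"],
}
# Assumed rule: changes touching these topics go to a lawyer.
ESCALATE_TOPICS = {"liability", "data use"}


def normalise(text):
    """Collapse whitespace and case so formatting-only edits compare as equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def changed_clauses(old_terms, new_terms):
    """Return (old, new) text pairs for paragraphs that differ after normalising."""
    old = [normalise(p) for p in old_terms.split("\n\n") if p.strip()]
    new = [normalise(p) for p in new_terms.split("\n\n") if p.strip()]
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((" ".join(old[i1:i2]), " ".join(new[j1:j2])))
    return changes


def triage(old_terms, new_terms):
    """Route a renewal: 'auto-approve', 'flag', or 'escalate', plus topics hit."""
    topics = set()
    for old_text, new_text in changed_clauses(old_terms, new_terms):
        combined = old_text + " " + new_text
        for topic, keywords in MATERIAL_TOPICS.items():
            if any(keyword in combined for keyword in keywords):
                topics.add(topic)
    if topics & ESCALATE_TOPICS:
        route = "escalate"
    elif topics:
        route = "flag"
    else:
        route = "auto-approve"
    return route, sorted(topics)
```

Calling `triage(last_year_terms, this_year_terms)` on two plain-text versions returns a route and the topics that triggered it. The point of the sketch is the shape of the decision: cosmetic edits drop out first, and only changes touching the sensitive topics reach a human.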

For version control and knowledge capture in escalated cases, Pincites was brought in through its Word plugin. It generated playbooks based on previous redlines and lawyer feedback from “buddy reviews,” ensuring the system continuously learned and improved.

Results

~90% of vendor renewals required no escalation, freeing the GC from nearly all routine reviews.

Review time dropped from 24 hours to just minutes for low-risk renewals.

The workflow created an auditable trail of term comparisons, giving the team confidence that silent vendor updates weren’t being missed.

First-pass AI review dropped from 90 minutes to 30 minutes, thanks to refinements in the company’s playbook and improved prompting using Pincites.
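For readers wondering what an auditable trail of term comparisons could look like in practice, here is one possible sketch. The source only says that results were saved in HTML for version tracking, so everything below is an assumption: the field names, the in-memory log, and the use of a SHA-256 fingerprint to detect that a vendor's online terms changed between fetches.

```python
import hashlib
from datetime import datetime, timezone


def snapshot(vendor, url, terms_text, fetched_at=None):
    """Build one audit-log entry for a fetched copy of a vendor's online terms."""
    fetched_at = fetched_at or datetime.now(timezone.utc)
    return {
        "vendor": vendor,
        "url": url,
        "fetched_at": fetched_at.isoformat(),
        # Fingerprint of the exact text that was reviewed.
        "sha256": hashlib.sha256(terms_text.encode("utf-8")).hexdigest(),
    }


def record(log, entry):
    """Append the entry and report whether the terms changed since the last snapshot."""
    previous = [
        e for e in log
        if e["vendor"] == entry["vendor"] and e["url"] == entry["url"]
    ]
    changed = bool(previous) and previous[-1]["sha256"] != entry["sha256"]
    log.append(entry)
    return changed
```

Each renewal adds one entry, so the log shows when the terms were last checked and whether they had moved since the previous check. A changed fingerprint says only that something is different; deciding whether the difference is material is still a separate step.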

Process

Adoption was gradual. Some lawyers were eager to try the tool; others were cautious. Instead of pitching it as a major transformation, the Director of Legal Ops framed it as “human-validated AI,” starting with small, verifiable wins and letting early adopters influence their peers. This phased rollout became a quiet success factor, allowing adoption to spread organically.

As the workflow matured, the main challenge wasn’t technical but cultural. Pincites excelled at first-pass issue spotting, but getting lawyers to engage with how it worked required some “human engineering.” Senior lawyers struggled with the idea of teaching the system. Improving it meant adjusting the natural-language rules behind the output, not just editing the document. They preferred explaining their reasoning out loud rather than experimenting directly with the rule logic.

A “buddy review” process helped bridge this gap, with the Director of Legal Ops acting as the translator between human feedback and machine learning. The team could simply talk through contract issues, and their input was captured as shared contract logic reflecting both institutional knowledge and the system’s growing precision.

Trust was another hurdle. Some worried about data privacy and whether OpenAI could train on company data. Clear explanations about the privacy protections in the ChatGPT Enterprise environment and early demonstrations of accuracy shifted the conversation from “is this safe?” to “is this useful?”

Finally, the technology had its limits. Models occasionally hit token caps or over-summarised. The workaround was targeted prompt engineering: chunking inputs and outputs and avoiding summarisation. Not elegant, but effective.
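As a rough illustration of the chunking workaround, the sketch below splits a long agreement at paragraph boundaries and wraps each chunk in a prompt that asks for a full list of changes with no summary. The team's actual prompts are not given in the source, so the prompt wording, the function names, and the 6,000-character budget are assumptions. Characters are used as a crude proxy for tokens.

```python
def chunk_paragraphs(text, max_chars=6000):
    """Group paragraphs into chunks of at most max_chars characters.

    A paragraph is only split if it is longer than the budget on its own.
    """
    chunks, current, size = [], [], 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        # Hard-split any single paragraph that exceeds the budget.
        pieces = [para[i:i + max_chars] for i in range(0, len(para), max_chars)]
        for piece in pieces:
            extra = len(piece) + (2 if current else 0)  # 2 = the "\n\n" joiner
            if current and size + extra > max_chars:
                chunks.append("\n\n".join(current))
                current, size = [], 0
                extra = len(piece)
            current.append(piece)
            size += extra
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def build_prompts(text, max_chars=6000):
    """Wrap each chunk in an instruction that asks for detail, not a summary."""
    chunks = chunk_paragraphs(text, max_chars)
    return [
        f"Part {i} of {len(chunks)}. List every change in full; "
        f"do not summarise.\n\n{chunk}"
        for i, chunk in enumerate(chunks, start=1)
    ]
```

Keeping paragraphs whole means a clause is less likely to be cut in half between two requests, and numbering the parts makes it easier to stitch the outputs back together in order.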

Quick Wins

What made this work wasn’t a shiny new piece of tech. It was the way the team approached both the problem and the messy middle: splitting up a convoluted workflow, matching user preferences, and testing the technology’s limits. Getting a new workflow off the ground is rarely about one big moment of success. More often, it’s about the small, practical wins that build momentum and keep a project moving forward.

For this legal team, those wins looked like:

Targeted use case selection. The team began with a narrow, low-risk, low-value focus: vendor renewals. By training ChatGPT to flag only material changes, they minimised false positives and kept lawyers out of routine reviews. This precise scoping accelerated results and built early confidence.

Buddy reviews for lawyer engagement. Rather than asking lawyers to manually edit prompts or rule logic, “buddy reviews” allowed them to give verbal feedback, which was transcribed into the playbook logic. This reduced review time by two-thirds and secured buy-in without requiring anyone to learn the ins and outs of AI.

Prompt engineering for clarity and depth. To overcome token limits and over-summarisation, the team wrote prompts that forced ChatGPT to chunk its input and output and to avoid summarising. This ensured complete, accurate comparisons, even for long, complex agreements.

Now it’s your turn. If your team is dealing with something similar, I hope this story sparks a few practical ideas you can put to work.

And… if you’ve been through something similar—or solved a different operational challenge altogether—I’d love to hear your story and spotlight your win.